Bolstering Claude Code: A Personal Development Environment Configuration Guide

Introduction: This guide walks through my personal Claude Code configuration, enhanced with multiple Model Context Protocol (MCP) servers that integrate with various tools and data sources. While this represents my specific setup, it’s designed to be adaptable for any developer who wants to boost their AI-assisted development workflows. Yes, it was authored largely by Claude, but reviewed by me.

What You’ll Learn:
- How to configure Claude Code for enterprise use
- Setting up essential MCPs (Memory, Filesystem, Tavily)
- Integrating enterprise-specific MCPs (Atlassian, cloud cost management)
- Practical examples of using MCPs together for real workflows
- My dotfiles and configuration management approach with YADM

Prerequisites:
- Claude Code installed (official installation guide)
- Basic terminal/command-line proficiency
- Node.js/npm installed (for some MCPs)

Part 1: Claude Code Installation & Configuration

Installing Claude Code: install it globally via npm with `npm install -g @anthropic-ai/claude-code`. If you’re using an enterprise LLM gateway or proxy, add the following to your shell configuration (e.g., ~/.zshrc): ...

GPS III: Where Are We? And Where Are We Going? [Archive]

Archival Note: This post preserves an article I originally wrote for MakeUseOf way back in August 2014, while I was at The University of Liverpool doing my PhD. The original published version is at MakeUseOf: GPS III: Where Are We? And Where Are We Going? They probably still own everything, but this is from my original ‘manuscript’.

Fifteen years ago, the first GPS-enabled cellphone went on the market. It flopped, but the form factor it pioneered, combining near-military-grade communications equipment with a consumer device, has stayed with us. ...

Notes from "Will GenAI Revolutionise our Lives for the Good?"

I was fortunate enough to be invited to participate in a debate raising money for Farset Labs, a cause obviously close to my cold, cynical heart. “Will GenAI Revolutionise Our Lives For The Good In The Next 5 Years?” is top-tier troll-bait from Garth and Art, and I’m very grateful to have shared the stage with the 5 other speakers. Even the lanky English one. I was particularly impressed by my teammates and their very human-led approach to this question (although everyone was great!) ...

Being a DORC in the age of Generative AI

Lots of people have written about the impact of generative AI on the world of software engineering, and while I write this I’m fighting with Copilot to stop it filling out the rest of the sentence. Gimme a second… … That’s better. Anyway. This is just a blurb/brain-dump of a shower-thought. Don’t come to me for deep, insightful stuff about the productivity gains of generative AI in software development, or whether it will be the end of ‘Juniors’ in software engineering, or how we’re going to grow juniors in future. ...

On Deepseek

Note: The continuing adventures of ‘a dozen people asked what I thought about a new AI model in work so I put them together and republished it a few months later when I got a quiet weekend’… So, Deepseek stripped billions from the market on Monday. Do we care? My 2c is that this is a fantastic series of innovations on the core design of LLMs, and based on those innovations, I wouldn’t be surprised if the quoted training costs, in the mid-to-high single-digit millions of dollars, are around the right order of magnitude (assuming you already had the in-house expertise of a PhD-fuelled quant hedge fund and didn’t pay them SV salaries). ...

AIOps Maturity Model

Introduction: This document outlines an AIOps Maturity Model to help organizations assess and improve their Machine Learning Operations capabilities. It came from my own frustration that none of the existing models fit the real experience of end-to-end relationships between data science and operations, covering both ‘conventional’ ML and the practicalities of LLM-based systems, and how completely differently you have to think about them. This was originally published internally around May ’24 and then presented at NIDC as an ‘Eye Test Model’, and I promised that I’d eventually publish it; this is it, dusted off and tidied up for public consumption. ...

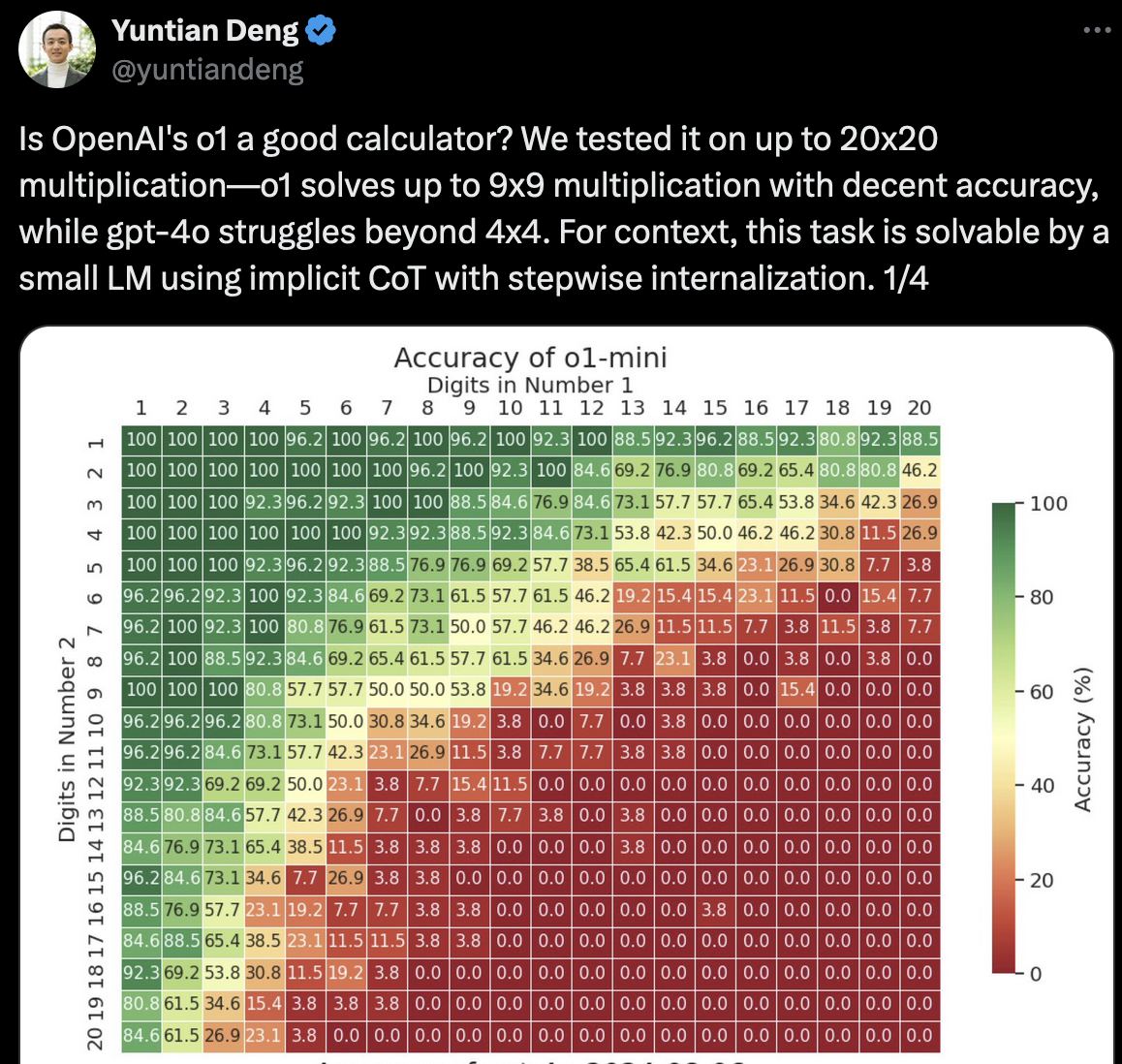

On OpenAI o1

Is an LLM Smarter than a 12-Year-Old? Had a few people ask about the o1 models; at work we’ve requested preview access from Microsoft to get them added to our internal LLM Gateway, so we’ll just wait and see, but there’s been some interesting discourse on it so far. My 2c is that this is OpenAI trying to take chain-of-thought (aka ‘talking to yourself’) in-house rather than people doing the intermediate steps themselves. (That means that instead of just running the token prediction, it’s a repeated conversation with itself, with OpenAI providing the ‘inner monologue’ and just magically popping out the answer.) This is fine in principle, and is how we do multi-shot RAG among other things, but the two (three) critical parts of this for me are ...

"Context all the way down": Primer on methods of Experience injection for LLMs

Much hay has been made of the idea that LLMs can be infinitely trained on infinite data to do infinite jobs, in an approach generally described as ‘LLM Maximalism’. This post is a bit of a braindump to explain my thought process on how to use LLMs practically and safely in production/client-facing environments, with a little discussion of where I see the current blockers to this in most organisations, and where organisations should be focusing investment to meet these challenges without losing their competitive edge/expertise. ...

Farewell Farset

Today, I’m no longer the Treasurer of Farset Labs, and in the next few days, I’ll officially have left the board of trustees of the charity that I helped form over 13 years ago. Farset Labs started as a Google Group that I set up in 2010. It took until 2012 to get our act together, along with some entertaining hackathons riding on the backs of our friends at Dragonslayers. Over the past decade-and-a-bit, I’d easily say that Farset Labs has been the keystone of my life and my career, and I’m pretty sure that (before the renovations in 2019) you’d have found my blood, sweat, and definitely tears staining various parts of the building. ...

Generative AI: Impact on Software Development and Security

This was a piece written as part of my work at Synopsys SIG and was published in a few places, but I liked it and wanted to keep it… at least until the lawyers chase me down.

Since the release of ChatGPT, the technology industry has been scrambling to establish and operationalise the practical implications of these human-level conversational interfaces. Now, almost every major organisation is connecting their internal or product documentation to a large language model (LLM) to enable rapid question-answering, and some are starting to wade into the use of generative AI systems to aid in the design and creation of new technical solutions, be it in marketing content, web application code or chip design. But the hype has had its sharp edges as well; the word ‘hallucination’ is never far from the lips of anyone discussing chatbots, and the assumptions that people have around human-like language being equivalent to ‘common sense’ have been seriously challenged. Potential users of LLM-derived systems would be wise to take an optimistic but pragmatic approach.

The release of the first major public large language model set off successive waves of amazement, intrigue and, often, fear on the part of a public unprepared for the surprisingly ‘human’ behaviour of this ‘chatbot’. It appeared to communicate with intentionality, with consideration, and with a distinctively ‘natural human’ voice. Over successive chat-enquiries, it was able to ‘remember’ its own answers to previous questions, enabling users to build up coherent and seemingly complex conversations, and to attempt to answer surprisingly ‘deep’ questions.
Yet, these systems should be treated as one would treat a child savant; it might know all the right words in the right order but may not really have the experience or critical thinking to evaluate its own view of the world; the outputs of these systems have not ‘earned’ our institutional trust, and care must be taken in leveraging these systems without significant oversight. ...