This piece was written as part of my work at Synopsys SIG and was published in a few places, but I liked it and wanted to keep a copy here… at least until the lawyers chase me down.

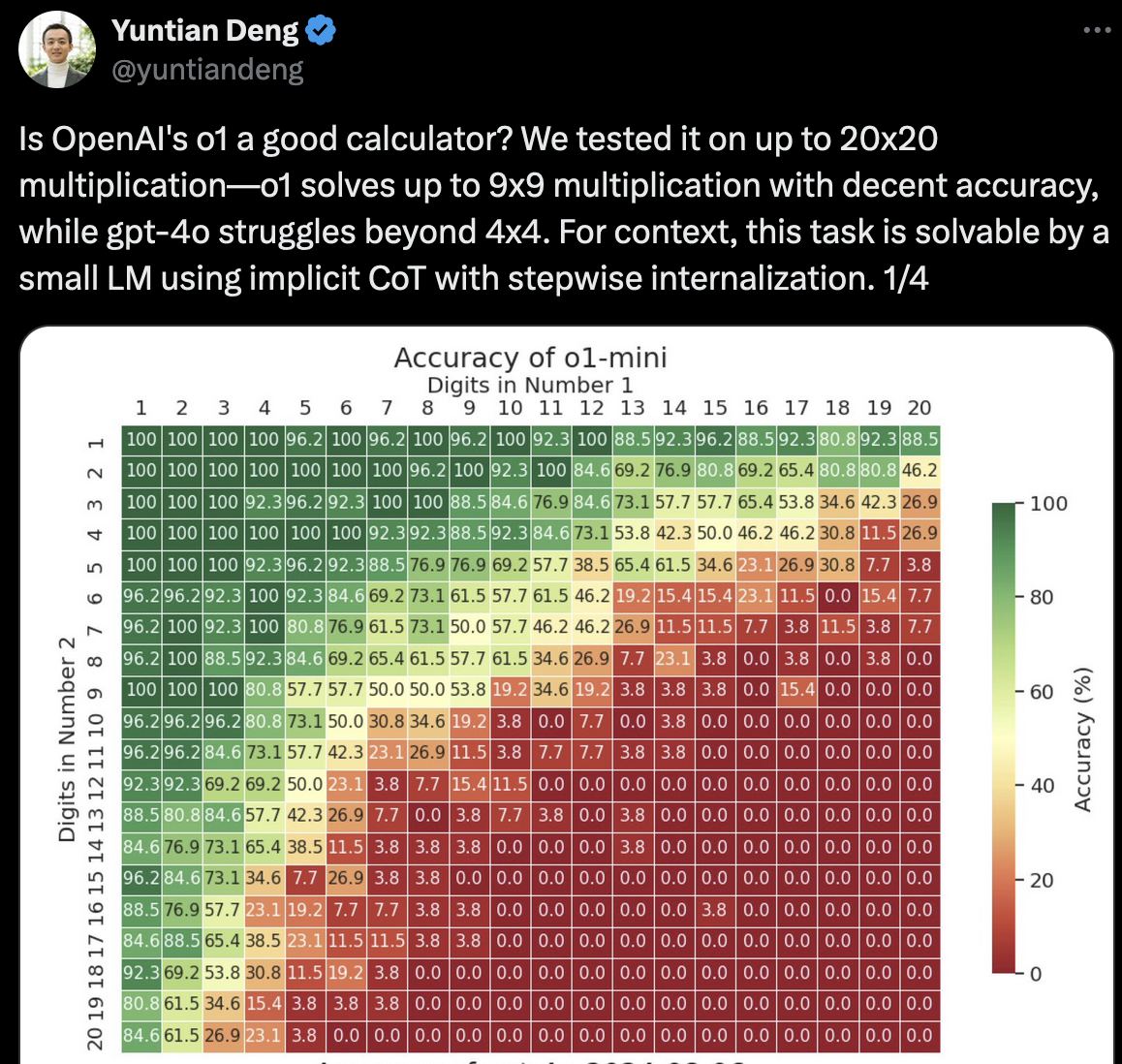

Since the release of ChatGPT, the technology industry has been scrambling to understand and operationalise the practical implications of these human-level conversational interfaces. Almost every major organisation is now connecting its internal or product documentation to a large language model (LLM) to enable rapid question-answering, and some are starting to wade into using generative AI systems to aid in the design and creation of new technical solutions, be it marketing content, web application code or chip design. But the hype has had its sharp edges as well: the word ‘hallucination’ is never far from the lips of anyone discussing chatbots, and the assumption that human-like language implies ‘common sense’ has been seriously challenged. Potential users of LLM-derived systems would be wise to take an optimistic but pragmatic approach.

The release of the first major public LLM chatbot set off successive waves of amazement, intrigue and, often, fear on the part of a public unprepared for its surprisingly ‘human’ behaviour. It appeared to communicate with intentionality, with consideration, and with a distinctively ‘natural human’ voice. Over successive chat enquiries, it was able to ‘remember’ its own answers to previous questions, enabling users to build up coherent and seemingly complex conversations and to pose surprisingly ‘deep’ questions. Yet these systems should be treated as one would treat a child savant: one who might know all the right words in the right order but lacks the experience or critical thinking to evaluate its own view of the world. The outputs of these systems have not ‘earned’ our institutional trust, and great care must be taken before relying on them without significant oversight.

...